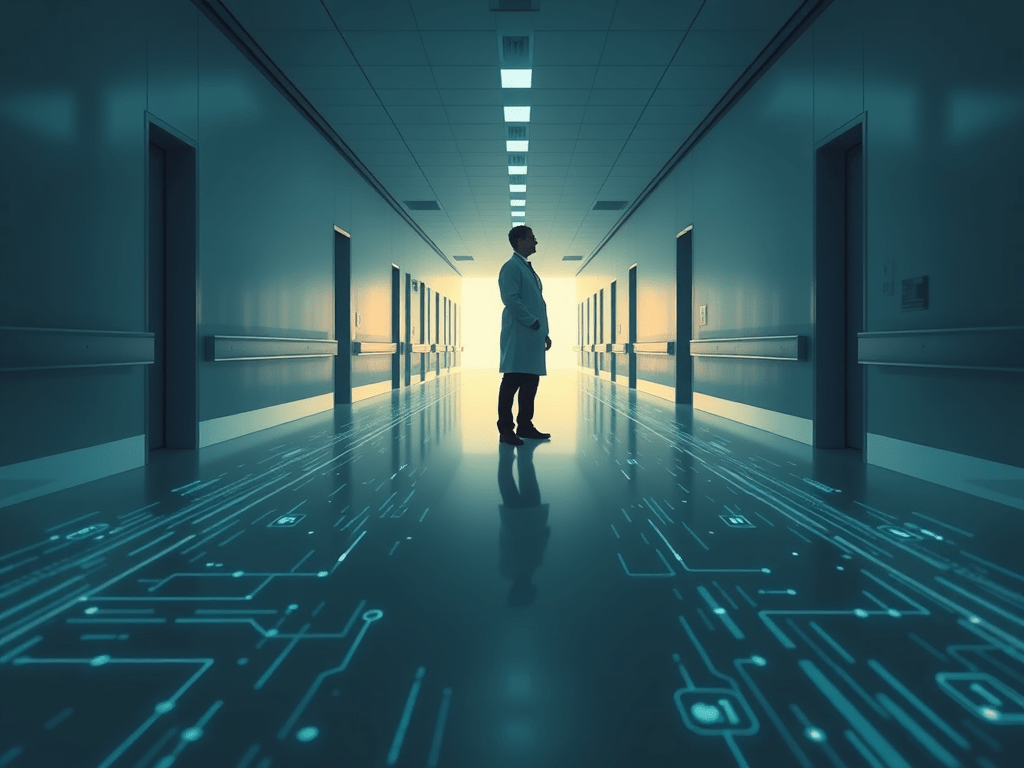

As algorithms seep into exam rooms, they aren’t taking over medicine so much as redefining what “good judgment” looks like—and who gets to decide.

By CHARCHER MOGUCHE

The loudest fears about artificial intelligence in medicine tend to fixate on replacement: machines diagnosing disease, robots delivering care, doctors edged out by software. But inside hospitals, clinics, and training programs, the real transformation is subtler and far more advanced. AI is not replacing doctors. It is changing how they think.

Across the U.S. health care system, algorithms increasingly shape clinical decisions—often without dramatic confrontation or explicit handoff of authority. Risk scores flag patients as “high” or “low” priority. Predictive tools estimate who might deteriorate overnight. Diagnostic prompts quietly steer attention toward certain explanations and away from others. Physicians remain in charge, at least formally. But the terrain on which they exercise judgment is increasingly mapped by machines.

The Quiet Shift No One Is Watching

This shift matters because clinical judgment is the core of medicine’s professional identity. It is where expertise, experience, ethics, and uncertainty converge. As AI systems define what counts as reasonable risk, appropriate intervention, or acceptable deviation, they are not just assisting clinicians—they are reshaping the standards by which decisions are made, evaluated, and defended. The question is no longer whether doctors are “in the loop,” but whether their judgment is being reengineered, quietly and at scale.

When Algorithms Define “Reasonable” Care

Modern medicine is already saturated with data. Electronic health records generate alerts for sepsis, stroke, falls, and readmission. Machine-learning models scan imaging studies and flag abnormalities. Triage systems rank patients by predicted risk, subtly ordering attention before clinicians even enter the room. These tools are usually described as decision support, not decision-making. Yet in practice, their influence can be difficult to ignore.

“If the system labels someone low risk, overriding that feels like you’re sticking your neck out,” said one hospital physician who works with AI-assisted triage tools. “Even if your instincts disagree, you know the algorithm will be part of how the case is judged later.”

That dynamic reflects a well-documented phenomenon known as automation bias: the tendency for humans to over-trust automated systems, especially in complex, high-stakes environments. In health care, where uncertainty is constant and liability looms large, algorithmic recommendations can feel less like optional advice and more like a baseline for defensible care.

Learning Medicine in an Algorithmic World

Over time, clinicians may internalize those baselines. The algorithm’s output becomes not merely another data point, but a reference for what a “reasonable doctor” would do. Deviating from it requires not just clinical confidence, but institutional courage.

Medical training increasingly reinforces this pattern. Students and residents now learn in environments where AI tools are embedded into routine practice. Diagnostic prompts, predictive analytics, and automated alerts are not novelties; they are infrastructure. New clinicians may never experience practicing medicine without algorithmic guidance shaping their attention and priorities.

This does not mean young doctors are less capable. But it does mean their intuition develops in dialogue with systems whose assumptions are often opaque. Many AI models are trained on historical health data that reflect existing inequalities in diagnosis, treatment, and access to care. When those models guide present decisions, they risk encoding yesterday’s biases into today’s standards.

Why Doctors Defer, Even When They’re Unsure

Questioning an algorithm is structurally harder than questioning a colleague. AI systems rarely explain themselves in ways clinicians can interrogate. Their reasoning is buried in statistical correlations, proprietary code, or black-box architectures. When an alert fires or a risk score appears, the underlying logic is usually inaccessible.

The stakes become clearest when things go wrong. In several documented cases, clinicians have deferred to algorithmic assessments that underestimated patient risk, delaying treatment with serious consequences. In others, alert systems generated so many warnings that clinicians learned to ignore them, sometimes missing genuine emergencies as a result.

What Happens When the System Gets It Wrong

These failures expose a deeper problem of accountability. If a doctor follows an algorithmic recommendation that leads to harm, who is responsible? The clinician who clicked “accept”? The hospital that deployed the system? The vendor that built the model? Regulators have yet to provide clear answers, leaving responsibility fragmented and ambiguous.

In that uncertainty, many clinicians choose the safest legal posture: alignment with the system. Overriding an algorithm may be clinically justified, but it can feel professionally risky—especially when electronic records meticulously log every deviation. The question becomes not only “Was this right for the patient?” but “How will this look afterward?”

The Illusion of Keeping Humans “In the Loop”

Hospitals often reassure the public that humans remain “in the loop.” Doctors retain final authority, and algorithms merely assist. Technically, this is true. But autonomy requires more than the ability to click override. It requires meaningful freedom to disagree without penalty. When algorithms define defaults, shape time pressure, and anchor institutional expectations, that freedom quietly narrows.

Medicine has always involved judgment under uncertainty. But judgment is not purely technical. It includes moral reasoning, contextual awareness, and responsiveness to patients’ values—qualities that resist easy quantification. When AI systems privilege what can be measured and predicted, they may marginalize what cannot.

How AI Quietly Shapes Clinical Judgment

• Risk Scores: Label patients as “high” or “low” priority, influencing attention before examination

• Default Pathways: Recommend standardized responses that discourage deviation

• Documentation Pressure: Record overrides in ways that heighten legal exposure

• Opacity: Limit clinicians’ ability to interrogate or challenge recommendations

• Normalization: Redefine what counts as “reasonable” care over time

Who Benefits From Algorithmic Medicine?

Ultimately, the most urgent question is not whether doctors remain in control, but whose interests these systems serve. Many AI tools are built by private companies, trained on patient data, and deployed in institutions under pressure to cut costs and standardize care. Their priorities—efficiency, scalability, risk reduction—do not always align with the messy realities of human illness.

Doctors may not be replaced by machines, but their judgment is being quietly reengineered—and medicine has yet to decide whether that future is one it actually wants.

Without transparent oversight, algorithmic judgment becomes a form of soft governance: influencing decisions without explicit mandates, shaping behavior without public debate. The transformation is subtle enough to escape panic, but profound enough to reshape medicine’s ethical core.

The Future of Medical Judgment

As AI adoption accelerates faster than professional norms and regulation can adapt, medicine faces a choice. It can treat these systems as neutral tools—or confront them as forces that reshape authority, responsibility, and care itself. The future of medical judgment depends on noticing the change while there is still time to shape it.
