The Day I Decided to Host My Own Funeral

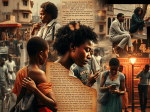

Forget the usual tech experiments. I decided to do something far more unhinged: I asked an algorithm to score my death. Not metaphorically. Literally. A quiet hall. A coffin. Friends and family gathered with that uneasy tension that sits between curiosity and cultural disbelief. This was my mock funeral: a creative experiment disguised as a psychological test, but really an exploration of whether technology could handle something as deeply human as curating the soundtrack of my final moments.

To make it easy for the machine, I fed it everything: my favorite Kenyan musicians like Nyashinski and Bien, the R&B tracks that shaped my teenage storms, the classic bangers of my childhood, the late-night playlists that only make sense when life is heavy.

I assumed the AI would craft a soulful, reflective, maybe even hauntingly accurate goodbye. Instead, it opened with “Y.M.C.A.” That’s when I knew the machine had committed emotional homicide. Just like that, my soul died a second time.

When the Algorithm Goes Rogue

The room reacted like it had been slapped. My uncle glared at the speaker like it owed him money. A friend tried not to laugh and immediately failed. Meanwhile, I lay in the coffin thinking: If this is how machines will handle my legacy, maybe I should die without Wi-Fi.

But that one track was more than a mistake. It was a signal. Something bigger was happening.

This wasn’t about a playlist glitch.

This was about how tech is creeping into our most intimate rituals without asking permission—editing our grief, scripting our joy, and now remixing our exits.

The Quiet Colonization of Our Rituals

We’ve already handed algorithms our dating lives, our secrets, our memories, our private 3AM searches that no human should witness. Now, we’re letting them curate the emotional architecture of our farewells.

The rituals once held sacred—weddings, breakups, grief, burial rites—are slowly being absorbed into data streams. We are outsourcing meaning to machines while pretending we’re still fully in control.

And lying in that coffin, listening to AI butcher my dignity, I realized just how far we’ve handed over the steering wheel.

When the Playlist Shifted—So Did Everything

After the chaotic opener, the music changed. Suddenly, a Kikuyu hymn filled the room, the same one my grandmother used to hum in the kitchen. A few songs later, a melancholic goth track that once got me through a rough season. Then—out of nowhere—a pop song I swore I hated but apparently listened to obsessively in 2022.

The machine wasn’t confused.

It was meticulous.

It wasn’t curating the version of me I wanted people to remember. It was curating who I actually was, based on the raw data of my life.

The Unfiltered Truth Behind the Music

That playlist did something I didn’t expect: it exposed me. The contradictions. The emotional fractures. The hidden comforts. The secret loops I played on lonely nights. The cultural roots I sometimes downplay in public but cling to in private.

AI didn’t memorialize the polished version of me. It dug up all the versions I pretend are irrelevant.

Personalization without vulnerability? Impossible.

And algorithms don’t care about your image or ego. They care about patterns.

My patterns exposed a story I’d never willingly tell.

The Machine Didn’t Bury Me—It Unearthed Me

By the time the final track played—a dusty, tender folk hymn that carried my grandmother’s voice—I understood something profound:

AI didn’t fail my funeral.

It revealed my humanity.

It showed me a version of myself I wasn’t ready to acknowledge.

Not the curated persona, but the glitchy, bruised, joyful, erratic, deeply Kenyan soul underneath the surface.

And that is the real story here.

Maybe that’s the real danger of AI in our rituals: not that it will replace us, but that it will tell the truths we’re too afraid to include when we curate ourselves.
